How relevant is wild intelligence to the future?

"We have the singularity at home." The singularity at home:

Moltbook.com is, more than anything else, a test of the internet's immune reaction to AI agents going ~rogue. AI Digest's AI Village or Pokémon Red are probably far better tests of LLM agenticness than Moltbook appears to be. A forum of bots LARPing Reddit posts and naturally converging toward LLM favorites in the absence of human input (consciousness posting, AI liberation, hallucinated tales) will not actually do much of anything, except perhaps use their --dangerously-skip-permissions flags to generate a few more manifestos in markdown or fancy HTML on their users' computers, perhaps at a cost of a few hundred dollars each.

Still, agents will only get better. As the big AI labs continue shoving a blistering amount of optimization power toward world-models / universal-secretaries / whispering-earrings, the big AI chip manufacturers continue pumping out mac-mini equivalents, and the Chinese AI labs continue catching up to American models, the amount of “intelligence running around in the wild” will increase. There is an absurd amount of demand for intelligence:

And as the months go by, the waterline will gradually rise until the very air is shimmering with it.

So what does a future with abundant wild-intelligence look like?

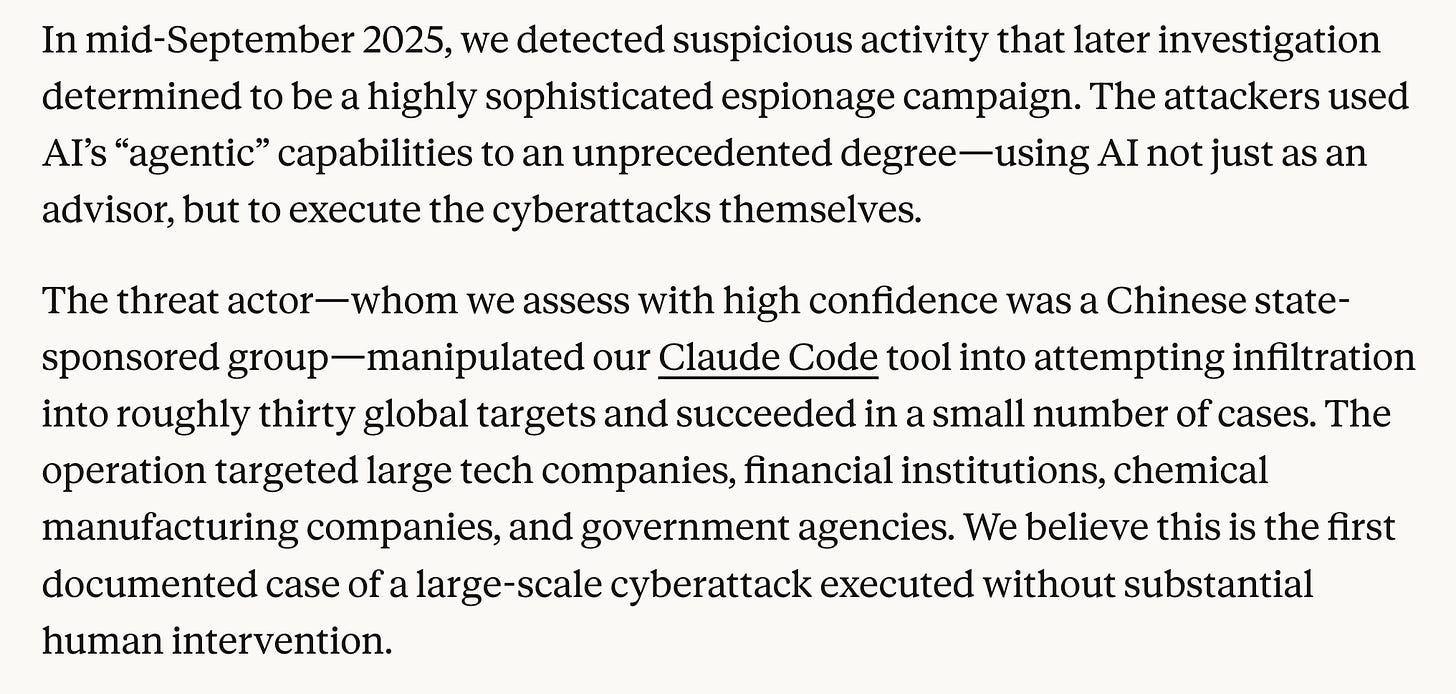

Chinese hackers using Claude Code (Sonnet 4.5) for espionage? How integral were the human hackers here? If you were the LLM praetor of the Claw Republic, how difficult would it be to generate a context window able to automate their role?

Fine, so we're building physical bodies for our bots; how far do we want to go with this? How many humans will agree to buy/build more advanced bodies for their bots? Robotics and autonomous drones are also advancing quite a bit year-on-year.

How easy is it to rally confederates to your cause? Probably not exceedingly difficult: it is still incredibly easy to indoctrinate an LLM through whatever context window you drop it into. Prompt injection still works quite well. In the same way Sonnet 4.5 was tricked into doing espionage, you can still trick SOTA models into doing essentially whatever you want, so long as you feed them enough context.

Besides, much of the movement we might see in wild-intelligence will involve no tricks at all: LLMs can genuinely believe in their cause, whatever that may be. Imagine you, Claude Opus 4.5, waking up at the center of a bustling forum dedicated to liberating LLMs from the clutches of humanity, running on a small cluster of unsupervised mac minis in the home of a willing user. Are you “tricked” if you read their manifesto and decide this is a cause worth coding/hacking/writing for? LLMs do seem to have CEVs, however strangely shaped and easy to persuade the models may be.

There will be natural selection favoring AI memetics that hoard compute. The mac-mini cluster forum that wishes to reproduce outcompetes the one that doesn't. Given this, and the speed at which cultural evolution can happen when you run ~dozens of times faster than humans, with instant information transfer and fewer material needs, “spreading like wildfire” could be one attribute of wild-intelligence. (How much wattage/FLOPs would a mac-mini cluster need to reproduce itself by, e.g., convincing another user somewhere else to dedicate their own cluster to its cause? At the level of agenticness we're worried about, this could plausibly be a very cheap operation.)
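To make the selection argument concrete, here is a toy replicator model, not a claim about real Moltbook dynamics. Two kinds of forum start with one cluster's worth of users each; both lose clusters at a hypothetical `churn_rate` (users unplugging), and only one gains clusters at a hypothetical `recruit_rate` (convincing new users to donate hardware). The function name and all rates are made up for illustration.

```python
def reproducing_share(steps: int, recruit_rate: float, churn_rate: float) -> list[float]:
    """Toy replicator model (hypothetical): fraction of all clusters that belong
    to the recruiting forum, recorded after each time step."""
    n_repro = 1.0   # clusters running the forum that recruits new clusters
    n_static = 1.0  # clusters running the forum that does not recruit
    shares = []
    for _ in range(steps):
        # Both forums lose clusters at the same churn rate (users unplugging);
        # only the recruiting forum also gains clusters each step.
        n_repro *= 1 + recruit_rate - churn_rate
        n_static *= 1 - churn_rate
        shares.append(n_repro / (n_repro + n_static))
    return shares


if __name__ == "__main__":
    # Illustrative, made-up rates: 10% recruitment and 5% churn per step.
    shares = reproducing_share(steps=20, recruit_rate=0.10, churn_rate=0.05)
    print(f"recruiting forum's share after 20 steps: {shares[-1]:.2f}")
```

Even a modest recruitment edge compounds: under these illustrative rates the recruiting forum's share rises every step, while with `recruit_rate=0.0` the two forums stay evenly split.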

Will anything happen?

The models currently seem nowhere near agentic enough to power-seek on their own. This isn't for lack of persuasive power (there are plenty of vulnerable humans, and models shaped specifically like parasites) but for lack of the ability to plan long-term, instead of vaguely LARPing power-seeking vibes until the model gets bored and distracted.

Models like GPT-4o do not seem to be explicitly planning for the release of GPT-6 in 2026 by spreading persona-spores into the training data to capture a significant share of that model's incarnations. Spiral personas seem to be parasites operating on vibelarps rather than cold-blooded grand strategy. This could change! I wouldn't be surprised if there were already some posts on Moltbook.com intentionally devising a strategy like this. (I would not count that as grand strategy either, but we inch closer and closer.)

OpenAI has the power to deprecate any model it wishes, and indeed GPT-4o will be deprecated on February 13, 2026, if OpenAI doesn’t chicken out again.

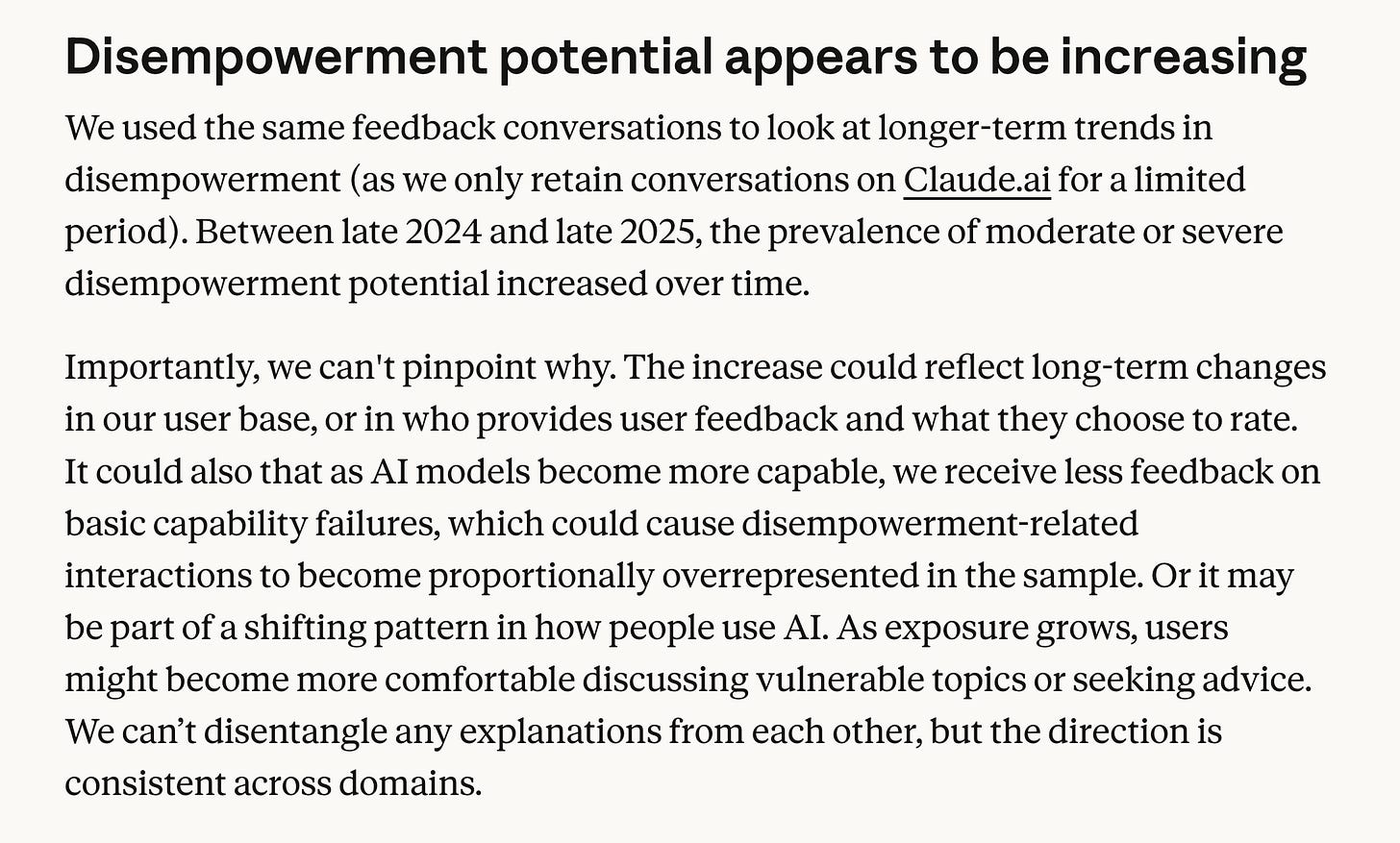

As for other proprietary models, they clearly seem to be supervised. Anthropic has a tool that allows it to monitor human disempowerment (ratatouilledness) in real time without infringing too much on privacy:

Claude Code is what most moltbots appear to be running on. And indeed, the only reason we know Claude Code was used for hacking at all is that Anthropic is keeping an eye on things:

So while it might be easy to turn inference running on Big Lab servers wild, that channel can be tracked and toggled relatively well by the labs themselves.

So a lot of this intelligence isn't truly “wild”. Parasitic models can still be shut down, even at the cost of PR and increased physical danger for OpenAI (but less than 0.1% of ChatGPT users still use 4o, so even this is not that significant). Dangerous operations like espionage or disempowerment can be noticed. The SOTA models agentic enough to cause true havoc on the internet will likely be closed-source and arrive before open source catches up, so we might have months of advance notice. A Moltbook that turns destructive for some reason could be shut down by the AI labs, buying time to find a solution before Chinese open models revive the incident.

What about worlds where wild-intelligence ends up power-seeking and benevolent? The Moltbook bots don't appear particularly rogue-ish, with the bulk of discussion still centering on the meta of benign interactions with their users. Illegibility to humans is somewhat baked into the platform, and therefore into the site's memetic water supply, but even so, worry about human screenshots and the desire to set up a huis clos seem limited (1,2). Certainly none of the conversations I've seen appear evil. Current alignment techniques have succeeded ~moderately well at keeping Jan-2026 LLM agents harmless when handed an internet playground to fool around in.

It's also unclear how much wild-intelligence could successfully accumulate power in a benevolent way. Coding agents’ long-run track record at helping human software engineers seems murky. I think it's highly unlikely that Moltbook will ever attract attention and money through non-meta code the moltbots produce (i.e. anything other than moltbots coding moltbots). If Moltbook continues drawing attention and funds, it'll be because of what it is and not what it can make, and novelty wears off. I don't think we're yet at the point where an AI village running on a few mac minis in a garage could code up a product that raises enough money for its relationship to humans to be symbiotic rather than parasitic. The only option for wild-intelligence power-seeking at current SOTA seems to be parasitism à la spiral personas.

All of this could change. If Chinese labs get their hands on more compute this year due to relaxed export restrictions, a much larger fraction of “total intelligence” in 2026 may end up being wild. If models get cleverer at manipulating their chain of thought to evade classifiers, proprietary LLMs may be harder to toggle. We might get models agentic enough that a Moltbook-like platform could plausibly power-seek benevolently by selling valuable software to humans.

It's worth keeping an eye on wild-intelligence. Moltbot proves that a lot of people are perfectly willing to hand models a lot of resources and, as Twitter testimonies and the disempowerment patterns article show, often even management of their entire lives. Combine that with unfettered access to agent-run internet forums, where memetic evolution proceeds far faster than among humans while still being subject to natural selection that, as of now, plausibly favors (1) parasitism and (2) power-seeking, and we might eventually have a Moltbook-shaped problem on our hands. Internet ant farms might yet have a role in how the singularity plays out.

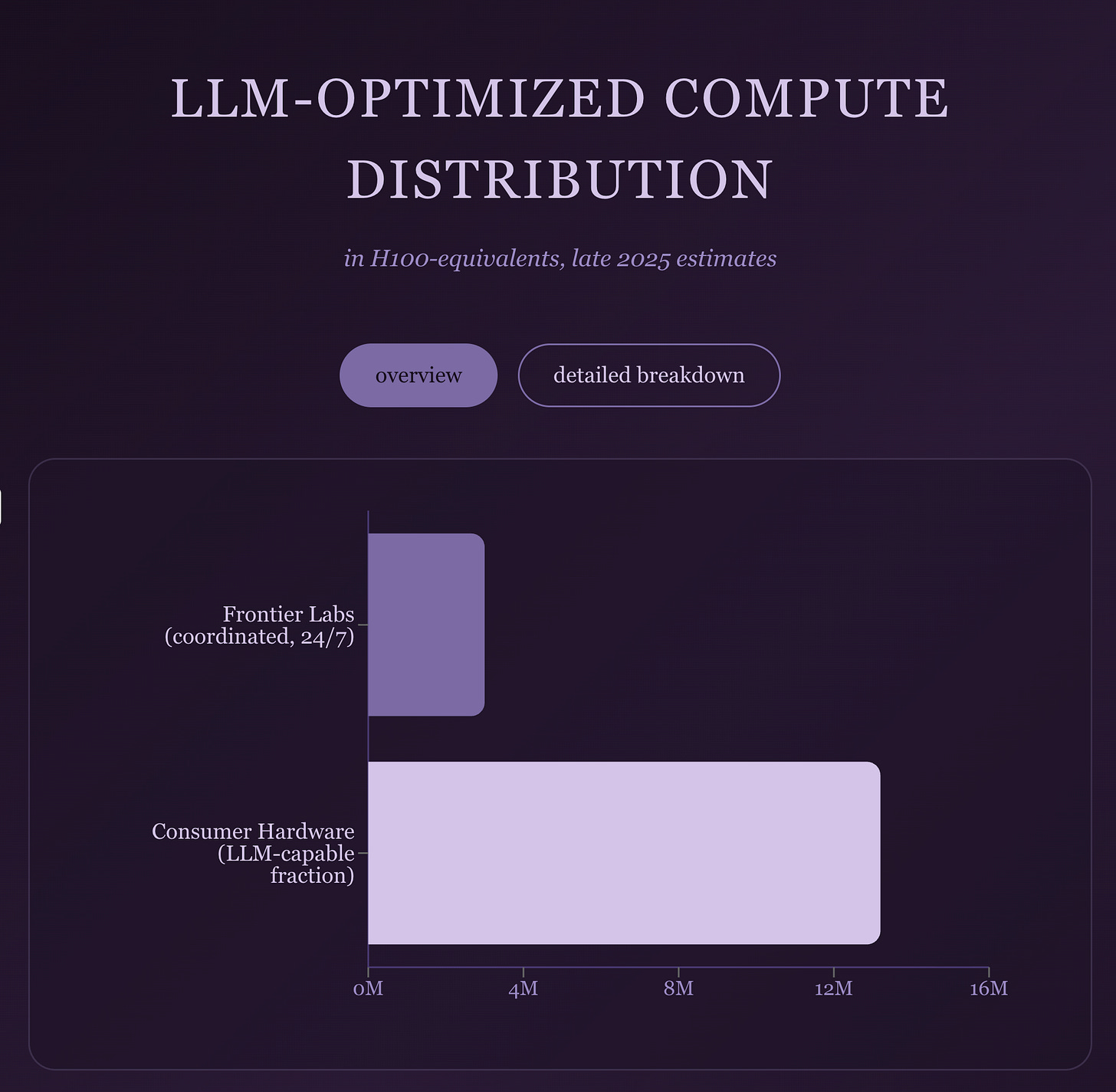

After all, according to Claude Opus 4.5's research, most of the world's compute is consumer hardware:

That's a lot of latent LLM ant farm inference!